Image generated with gpt4o

Large-language-model (LLM) agents can now plan, reason and act beyond the bounds of a single prompt, but only when they can remember what happened five minutes—or five months—ago. Plain chat-history replay burns tokens, slows inference and still leaves the model groping for structure. Knowledge graphs (KGs) offer a principled alternative: a compact, queryable and semantically rich substrate that turns unstructured experiences into first-class, interconnected facts. This article explains what a knowledge graph is, why graph-based memory is emerging as the backbone of agentic LLM systems, and how real-time, temporal KGs such as Graphiti provide a ready-made toolkit. We cover core graph concepts, ingestion pipelines, retrieval patterns, performance trade-offs, integration recipes, open challenges and future directions. Throughout, concrete examples and citations to the latest open-source work ground the discussion in practice.

Table of contents

- Knowledge graphs in a nutshell

- Why LLMs need structured memory

- Agentic memory 101

- From tokens to triples: building the graph

- Querying for context

- Efficiency & scaling considerations

- Introducing Graphiti

- Graphiti in an LLM-agent pipeline

- Challenges and research frontiers

- Conclusion

1. Knowledge graphs in a nutshell

A knowledge graph is a network of entities (nodes) and relationships (edges) enriched with attributes, temporal qualifiers and, often, an explicit ontology. Where a relational database stores facts in rigid tables, a KG embraces heterogeneity: the same graph can link Charles Babbage to the Analytical Engine (invented), the Analytical Engine to mechanical computer (is-a) and mechanical computer to computing history (topic-of) without schema contortions.

Three features distinguish KGs:

- Semantic expressiveness – Every node and edge carries meaning through IRIs or property labels, allowing machines (and humans) to interpret paths.

- Connectivity – The graph topology itself is information; shortest paths, centrality or motifs reveal implicit knowledge.

- Extensibility – New node or edge types can be added without migrations.

Early web-scale KGs—Freebase, DBpedia, Google’s Knowledge Graph—powered search and question answering, but the idea predates them. RDF, OWL and property graphs (e.g., Neo4j) each embody the core triplet ⟨subject, predicate, object⟩.

In 2025, two broad trends have converged: (1) transformer models that can generate and consume triples in natural language (see this article in LangGraph docs), and (2) cheap, highly concurrent graph stores that can update millions of edges per second. Together they make it feasible to treat every conversation turn, document chunk or API response as a stream of semantic deltas appended to the agent’s personal knowledge graph.

What's a triple?

A triple is the fundamental unit of data in a knowledge graph and represents a single fact in the form:

Subject → Predicate → Object

Each triple expresses a relationship between two entities or concepts:

- Subject: the entity being described (e.g., “Alice”). This maps to a node in a graph database.

- Predicate: the property or relationship (e.g., “worksAt”). This maps to an edge (relation) in a graph database.

- Object: the value or another entity (e.g., “AcmeCorp”). This maps either to another node or to an attribute value of the subject.

Take this example triple:

Alice – worksAt – AcmeCorp

which means that Alice works at AcmeCorp.

This structure follows the Resource Description Framework (RDF) model and allows machines to interpret and query semantic relationships between data points. Triples are the building blocks of semantic reasoning, data integration, and graph-based AI.
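As a minimal sketch of the idea, triples can be held as plain tuples and indexed by subject. The `Triple` type, the `build_index` helper and the `locatedIn` fact are illustrative assumptions, not part of RDF or of any particular library:

```python
from collections import defaultdict
from typing import NamedTuple


class Triple(NamedTuple):
    """One fact: subject --predicate--> object."""
    subject: str
    predicate: str
    obj: str  # another entity or a literal value


def build_index(triples):
    """Group triples by subject so outgoing facts can be looked up quickly."""
    index = defaultdict(list)
    for t in triples:
        index[t.subject].append(t)
    return index


facts = [
    Triple("Alice", "worksAt", "AcmeCorp"),
    Triple("AcmeCorp", "locatedIn", "Berlin"),  # illustrative extra fact
]
index = build_index(facts)

# Follow two hops: where is Alice's employer located?
employer = next(t.obj for t in index["Alice"] if t.predicate == "worksAt")
city = next(t.obj for t in index[employer] if t.predicate == "locatedIn")
print(f"Alice works at {employer}, located in {city}")
```

Because the object of one triple can be the subject of another, chaining lookups like this is what turns isolated facts into a graph.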

2. Why LLMs need structured memory

LLMs shine at pattern completion but suffer from a finite context window and quadratic attention cost. When an agent must:

- track long-running goals,

- remember user preferences,

- ground plans in external data, and

- cite sources,

it needs memory that is persistent, filterable and explainable.

Limitations of plain text or vector memory

- Token bloat – Dumping yesterday’s conversation into today’s prompt wastes context and money.

- Opaque retrieval – Pure embedding search surfaces “similar” chunks, but misses multi-hop relations (“Who else worked with the user’s favorite collaborator?”).

- Stale or duplicated facts – A vector store can hold conflicting passages with no easy way to reconcile.

Advantages of graph memory

- Compactness – The same fact need only appear once; references are cheap edges.

- Reasoning – Traversals and graph algorithms (e.g., path finding, subgraph-matching) expose chains of logic that the agent can cite.

- Multimodal joins – Structured business data, web tables and conversational snippets co-exist.

- Explainability – A subgraph can be rendered for debugging or shown to users.
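To make the reasoning advantage concrete, here is a small sketch of the multi-hop question from the limitations list ("Who else worked with the user's favorite collaborator?") answered over an edge list. The edges, names and helper functions are all made up for illustration:

```python
# Toy edge list: (subject, predicate, object). All names are made up.
edges = [
    ("user", "favorite_collaborator", "Dana"),
    ("Dana", "worked_with", "user"),
    ("Dana", "worked_with", "Eli"),
    ("Dana", "worked_with", "Fay"),
    ("Gus", "worked_with", "Hal"),
]


def objects(subject, predicate):
    """Return every object reachable from `subject` via `predicate`."""
    return [o for s, p, o in edges if s == subject and p == predicate]


def coworkers_of_favorite(user):
    """Hop 1: user -> favorite collaborator. Hop 2: collaborator -> coworkers."""
    result = []
    for collaborator in objects(user, "favorite_collaborator"):
        for person in objects(collaborator, "worked_with"):
            if person != user and person not in result:
                result.append(person)
    return result


print(coworkers_of_favorite("user"))
```

The two nested loops are the two hops; the path that produced each answer (user, Dana, coworker) can be shown to the user as a citation.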

Research such as the A-MEM framework formalises agentic memory, arguing that dynamically linked notes outperform flat stores on reasoning benchmarks.

3. Agentic memory 101

A practical taxonomy—adapted from cognitive psychology—splits memory into:

| Layer | Scope | Lifespan | Implementation hint |
|---|---|---|---|
| Working | Current reasoning chain | seconds-minutes | Scratchpad variables, prompt-in-subgraph |
| Episodic | What happened in each session | hours-days | Conversation nodes linked by occurred-at edges |
| Semantic | Consolidated facts | days-months | Entity nodes, typed relations, versioned attributes |
| Procedural | “How-to” skills | weeks-years | Function/plan nodes and success metrics |

An agentic system decides when to query or update each layer. Memory-related function calls might include graph.add_episode(event) or graph.query(context_query). The CoALA framework further observes that the KG mediates between short-term “scratch” memory and long-term storage, enabling iterative refinement.
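The following sketch shows how the episodic and semantic layers might sit behind the two calls mentioned above. `GraphMemory` is a toy in-memory stand-in written for this article, not a real library class; only the method names `add_episode` and `query` mirror the text:

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class GraphMemory:
    """Toy stand-in for a graph-backed memory (not a real library API)."""
    episodes: list = field(default_factory=list)  # episodic layer
    facts: set = field(default_factory=set)       # semantic layer

    def add_episode(self, event, triples=()):
        """Record what happened, then consolidate any extracted facts."""
        self.episodes.append((datetime.now(timezone.utc), event))
        self.facts.update(triples)

    def query(self, entity):
        """Return consolidated facts that mention `entity`."""
        return sorted(t for t in self.facts if entity in (t[0], t[2]))


graph = GraphMemory()
graph.add_episode(
    "Alice said she prefers email updates",
    triples=[("Alice", "prefers_channel", "email")],
)
graph.add_episode("Alice asked about order 42")  # episodic only, no new fact
print(graph.query("Alice"))
```

Every turn lands in the episodic log, but only extracted facts reach the semantic layer, which is the part that gets queried for context.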

4. From tokens to triples: building the graph

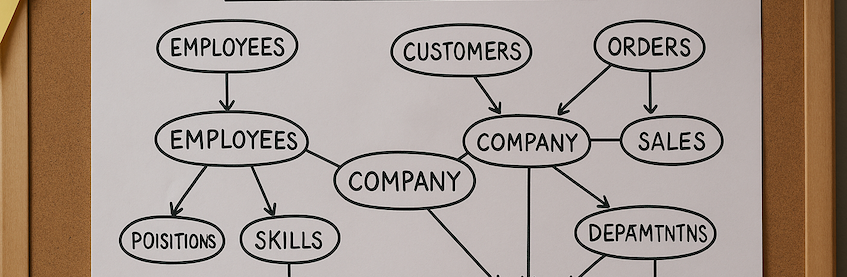

Converting a piece of text into a network-like data structure is not straightforward with traditional language-processing algorithms, but LLMs handle this kind of structuring well, as this example from the database vendor Neo4j shows. It starts from the text scratchpad, and the model organizes its content into entities, relationships and attributes. An attribute can hold any type of information, from a simple numerical field to an image embedding. This graph builder is open source and works with many unstructured input formats (text, video, etc.) and the most commonly used LLMs. If you are interested in the “magic”, take a look at the core prompts, which basically hinge on three templates:

    # Adjacent string literals are concatenated, so each one ends with a space.
    PROMPT_TEMPLATE_WITH_SCHEMA = (
        "You are an expert in schema extraction, especially for extracting graph schema information from various formats. "
        "Generate the generalized graph schema based on input text. Identify key entities and their relationships and "
        "provide a generalized label for the overall context. "
        "Schema representations formats can contain extra symbols, quotes, or comments. Ignore all that extra markup. "
        "Only return the string types for nodes and relationships. Don't return attributes."
    )

    # Doubled braces ({{ }}) are escapes for a format-style template.
    PROMPT_TEMPLATE_WITHOUT_SCHEMA = ("""
    You are an expert in schema extraction, especially in identifying node and relationship types from example texts.
    Analyze the following text and extract only the types of entities (node types) and their relationship types.
    Do not return specific instances or attributes, only abstract schema information.
    Return the result in the following format:
    {{"triplets": ["<NodeType1>-<RELATIONSHIP_TYPE>-><NodeType2>"]}}
    For example, if the text says “John works at Microsoft”, the output should be:
    {{"triplets": ["Person-WORKS_AT->Company"]}}
    """
    )

    PROMPT_TEMPLATE_FOR_LOCAL_STORAGE = ("""
    You are an expert in knowledge graph modeling.
    The user will provide a JSON input with two keys:
    - "nodes": a list of objects with "label" and "value" representing node types in the schema.
    - "rels": a list of objects with "label" and "value" representing relationship types in the schema.
    Your task:
    1. Understand the meaning of each node and relationship label.
    2. Use them to generate logical triplets in the format:
    <NodeType1>-<RELATIONSHIP_TYPE>-><NodeType2>
    3. Only return a JSON list of strings like:
    ["User-ANSWERED->Question", "Question-ACCEPTED->Answer"]
    Make sure each triplet is semantically meaningful.
    """
    )

4.1 Extraction pipeline

- Segmentation – Break raw text (user message, API JSON, PDF page) into cognitively coherent chunks.

- Entity recognition & normalisation – Use a small LLM or spaCy model to spot candidate entities; reconcile to existing nodes via embedding similarity or canonical keys (email, ISBN).

- Relation extraction – Prompt an LLM with examples to output RDF-style triples.

- Temporal stamping – Attach start_time, end_time properties; if unknown, default to ingested_at.

- Validation & ranking – Cross-check against schema; discard low-confidence triples.
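The five steps above can be sketched end to end in a few lines. This is only a toy under loud assumptions: regular expressions stand in for the LLM relation extractor, an alias table stands in for embedding-based entity matching, and the `ALIASES`, `SCHEMA` and `PATTERNS` tables are invented for the example:

```python
import re
from datetime import datetime, timezone

# Canonical names for known aliases (stand-in for embedding-based matching).
ALIASES = {"acme": "AcmeCorp", "acme corp": "AcmeCorp"}
# Allowed predicates (a toy schema used in the validation step).
SCHEMA = {"works_at", "lives_in"}
# Very rough patterns standing in for an LLM relation extractor.
PATTERNS = [
    (re.compile(r"(\w+) works at ([\w ]+)", re.I), "works_at"),
    (re.compile(r"(\w+) lives in ([\w ]+)", re.I), "lives_in"),
    (re.compile(r"(\w+) likes ([\w ]+)", re.I), "likes"),  # not in SCHEMA
]


def segment(text):
    """Step 1: split raw text into sentence-sized chunks."""
    return [s.strip() for s in re.split(r"[.!?]", text) if s.strip()]


def normalise(name):
    """Step 2: map surface forms onto canonical entity names."""
    return ALIASES.get(name.strip().lower(), name.strip())


def extract(text, ingested_at=None):
    """Steps 3-5: extract triples, stamp them with a time, validate."""
    ingested_at = ingested_at or datetime.now(timezone.utc)
    triples = []
    for chunk in segment(text):
        for pattern, predicate in PATTERNS:
            match = pattern.search(chunk)
            if match and predicate in SCHEMA:  # drop off-schema relations
                triples.append({
                    "subject": normalise(match.group(1)),
                    "predicate": predicate,
                    "object": normalise(match.group(2)),
                    "start_time": ingested_at,  # default when unknown
                })
    return triples


for t in extract("Alice works at Acme Corp. Bob lives in Paris. Bob likes tea."):
    print(t["subject"], t["predicate"], t["object"])
```

In a real pipeline the regex table would be replaced by a prompted model and the schema check would also weigh a confidence score, but the order of the stages stays the same.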

4.2 Incremental vs batch updates

Traditional knowledge-base population ran nightly ETL jobs. For agents interacting in real time, latency must drop to milliseconds. Frameworks such as Graphiti accept streaming deltas and atomically merge them into the underlying Neo4j store, avoiding expensive recomputation.

4.3 Handling change over time

“Bob lives in Paris” might become “Bob lives in Madrid.” Rather than overwrite, a temporal KG stores multiple lives_in edges with valid-time intervals; queries can ask as of July 22 2025 or current. Graphiti’s episode model encapsulates these temporal slices automatically.
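A minimal sketch of this idea, independent of Graphiti's actual data model: each edge carries a validity interval, a new fact closes the previous open edge instead of overwriting it, and an "as of" query filters by date. The `TemporalEdge` class, the helper names and the dates are assumptions made for illustration:

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class TemporalEdge:
    subject: str
    predicate: str
    obj: str
    valid_from: date
    valid_to: Optional[date] = None  # None means "still valid"

    def valid_at(self, when):
        """True if `when` falls inside the half-open interval [from, to)."""
        return self.valid_from <= when and (self.valid_to is None or when < self.valid_to)


def record_fact(edges, subject, predicate, obj, since):
    """Close any open edge for (subject, predicate), then append the new one."""
    for e in edges:
        if e.subject == subject and e.predicate == predicate and e.valid_to is None:
            e.valid_to = since
    edges.append(TemporalEdge(subject, predicate, obj, since))


def as_of(edges, subject, predicate, when):
    """Return the objects that were valid for (subject, predicate) at `when`."""
    return [e.obj for e in edges
            if e.subject == subject and e.predicate == predicate and e.valid_at(when)]


edges = []
record_fact(edges, "Bob", "lives_in", "Paris", date(2023, 1, 1))   # made-up dates
record_fact(edges, "Bob", "lives_in", "Madrid", date(2025, 3, 1))

print(as_of(edges, "Bob", "lives_in", date(2024, 6, 1)))   # historical view
print(as_of(edges, "Bob", "lives_in", date(2025, 7, 22)))  # as of July 22 2025
```

Both edges stay in the graph, so the agent can answer "where did Bob live last year?" as well as "where does Bob live now?".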

5. Querying for context

An agent usually issues one of three query patterns:

- Neighborhood-centric – “What do we know about Alice relevant to shipping?” → hop radius ≤2 around the Alice node, filtered by predicates in a shipping ontology.

- Path-finding – “How is Project Zephyr connected to revenue targets?” → K-shortest paths with typed constraints.

- Hybrid vector + graph – Embed the user’s latest utterance, find semantic neighbors, then expand to their structural neighbors for rich context.

Graph traversal returns a subgraph that is then:

- ranked, keeping the top-k nodes by relevance score,

- pruned to a token budget, and

- serialised (e.g., as JSON-LD) and injected into the LLM prompt under a KnowledgeGraphContext header.
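The neighborhood pattern and the three post-processing steps can be sketched as follows. Everything here is an assumption for illustration: the toy triples and scores, the word-count token estimate, and plain JSON in place of JSON-LD. Only outgoing edges are followed, to keep the example short:

```python
import json
from collections import deque

# Toy graph: (subject, predicate, object, relevance score). Values are made up.
TRIPLES = [
    ("Alice", "has_address", "12 Main St", 0.9),
    ("Alice", "placed", "Order-7", 0.8),
    ("Order-7", "shipped_via", "DHL", 0.7),
    ("DHL", "headquartered_in", "Bonn", 0.2),  # third hop, outside the radius
    ("Alice", "likes", "jazz", 0.1),
]


def neighborhood(start, max_hops=2):
    """Breadth-first expansion: collect triples within `max_hops` of `start`."""
    seen, found = {start}, []
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue
        for s, p, o, score in TRIPLES:
            if s == node:
                found.append((s, p, o, score))
                if o not in seen:
                    seen.add(o)
                    queue.append((o, depth + 1))
    return found


def estimate_tokens(triple):
    """Crude token estimate: one token per whitespace-separated word."""
    return sum(len(str(part).split()) for part in triple[:3])


def build_context(start, token_budget, max_hops=2):
    """Rank by score, keep triples while they fit the budget, serialise to JSON."""
    ranked = sorted(neighborhood(start, max_hops), key=lambda t: t[3], reverse=True)
    kept, used = [], 0
    for t in ranked:
        cost = estimate_tokens(t)
        if used + cost <= token_budget:
            kept.append({"s": t[0], "p": t[1], "o": t[2]})
            used += cost
    return json.dumps({"KnowledgeGraphContext": kept})


print(build_context("Alice", token_budget=10))
```

A production system would use the model's real tokenizer for the budget and a learned or hybrid relevance score, but the rank, prune, serialise order is the same.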

Compared with naïve sliding-window history, a 2-hop subgraph of ~200 triples often fits within 4-6 % of GPT-4o’s 128 k-token limit while carrying more semantics. Empirical benchmarks inside Zep report latency under 50 ms for 50 k-edge graphs on commodity hardware.
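As a quick sanity check on those figures, the arithmetic below works out what 4-6 % of the window implies per triple. It assumes "128 k" means 128,000 tokens and takes the 200-triple subgraph size from the text; the function name is illustrative:

```python
CONTEXT_WINDOW = 128_000  # tokens; assumes "128 k" means 128,000
N_TRIPLES = 200           # size of the 2-hop subgraph quoted above


def tokens_per_triple(share_of_window):
    """Tokens available per triple when the subgraph uses this share of the window."""
    return CONTEXT_WINDOW * share_of_window / N_TRIPLES


for share in (0.04, 0.06):
    budget = CONTEXT_WINDOW * share
    print(f"{share:.0%} -> {budget:,.0f} tokens, {tokens_per_triple(share):.1f} per triple")
```

That is 5,120 to 7,680 tokens in total, or roughly 26 to 38 tokens per serialised triple, which leaves room for property names and timestamps alongside the subject, predicate and object.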

6. Efficiency & scaling considerations

- Storage overhead – Property graphs compress well (integers for node IDs, dictionary-encoded properties). A ten-million-edge graph can sit in <4 GB with columnar or compressed-adjacency layouts.

- Write amplification – Frequent micro-updates risk contention; batched commits or log-structured storage mitigate this.

- Indexing – Graphiti auto-creates full-text indexes on node labels and edge types, and supports BM25 scoring for natural-language queries. The latest v0.17.x release adds filterable periodicity ranges.

- Distributed execution – For >100 M edges, sharding or scaling out to “fabric” modes of Neo4j/JanusGraph becomes necessary, but many agentic use-cases stay below that threshold.

- Cost vs vector search – Graph traversal is O(k) in the number of neighbors visited, whereas approximate vector search with HNSW is roughly O(log n) in the number of stored vectors; hybrid retrieval lets you trade one for the other. The graph search section of the Pinecone docs has a good tutorial on this kind of index.
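To illustrate the hybrid trade-off from the last bullet, the sketch below picks seed nodes by cosine similarity and then expands them by one graph hop. It uses a brute-force O(n) scan rather than HNSW, and the embeddings, node names and adjacency list are invented for the example:

```python
import math

# Made-up 3-dimensional embeddings; a real system would use an embedding model.
EMBEDDINGS = {
    "shipping_policy": [0.9, 0.1, 0.0],
    "refund_policy": [0.7, 0.3, 0.1],
    "jazz_playlist": [0.0, 0.2, 0.9],
}
# Adjacency list for the structural (graph) side.
NEIGHBORS = {
    "shipping_policy": ["carrier_DHL", "warehouse_Berlin"],
    "refund_policy": ["payment_provider"],
    "jazz_playlist": ["artist_Coltrane"],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def hybrid_retrieve(query_vec, k=1):
    """Brute-force top-k by cosine similarity, then add 1-hop graph neighbors."""
    seeds = sorted(EMBEDDINGS, key=lambda n: cosine(query_vec, EMBEDDINGS[n]),
                   reverse=True)[:k]
    expanded = list(seeds)
    for seed in seeds:
        for n in NEIGHBORS.get(seed, []):
            if n not in expanded:
                expanded.append(n)
    return expanded


print(hybrid_retrieve([1.0, 0.0, 0.0], k=1))
```

Raising `k` spends more on the vector side and pulls in more seeds; raising the hop count spends more on traversal and pulls in more structure around each seed.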

7. Introducing Graphiti

Graphiti is an Apache-2.0, Python-based framework “for building and querying real-time, temporally-aware knowledge graphs tailored to AI agents” (14 k ⭐, July 22 2025).

7.1 Why Graphiti?

- Temporal first-class citizen – Every edge carries valid_from / valid_to, enabling “time travel” queries.

- Streaming ingest – An Episode API accepts lists of triples with optional embeddings; Graphiti merges, version-controls and indexes in one call.

- Hybrid retrieval – Built-in vector store (PGVector, Weaviate or Qdrant) can be toggled on; cross-encoder reranker (OpenAI-based) boosts precision.

- Cypher-like query language – You can say:

      MATCH (u:User {id: $uid})-[r:PREFERS]->(p:Product)
      WHERE r.valid_at($ts)
      RETURN p.name, p.price

- Server or embedded modes – Run a Docker container (docker run -p 8000:8000 getzep/graphiti-server) or import graphiti_core directly in Python.

- Ecosystem bridges – Adapters for LangGraph, LangChain, Autogen and OpenAI Function-Calling.

- Observability – Prometheus metrics, OpenTelemetry traces and a React admin console.

7.2 Architecture snapshot

    LLM agent ↔ MemoryManager
                 ↙         ↘
    Graphiti Core ⇆ Vector DB (optional)
                 ↘         ↙
          Neo4j 4.x backend

7.3 Road-map highlights (mid-2025)

- Edge-typed transformers – Fine-tuned to generate candidate relationships.

- Live graph summarisation – Automatic abstract-overviews fed back to the agent.

- RBAC – Per-user subgraph views for privacy.

Graphiti’s design goal is low cognitive overhead for agent developers: one upsert_episode() call per turn deposits new knowledge, and one query_subgraph() call returns context. For hobbyists, this means you can bolt meaningful memory onto a ChatGPT plugin in an afternoon; for enterprises, it supports streaming ingest from Kafka topics with back-pressure.

The official docs provide a ten-line Quickstart; the associated Zep SaaS wraps Graphiti as a “Context Engineering Platform” that auto-extracts entities from user calls.

8. Graphiti in an LLM-agent pipeline

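    # Interface names below (`Client`, `query_subgraph`, `upsert_episode`) are
    # illustrative; verify them against the Graphiti docs for your installed version.
    from graphiti_core import Client
    from my_agent import Agent  # hypothetical agent framework

    graph = Client(url="http://localhost:8000")

    agent = Agent(
        # Read path: fetch a hybrid (vector + graph) context for the incoming message.
        memory_retriever=lambda msg: graph.query_subgraph(
            query_type="hybrid",
            text=msg.text,
            k=20,
            now="2025-07-22T10:14:00Z",  # fixed for illustration; pass the current time in practice
        ),
        # Write path: persist the facts the LLM decided to remember.
        memory_writer=lambda notes: graph.upsert_episode(
            triples=notes.triples,
            embeddings=notes.embs,
            occurred_at=notes.when,
        ),
    )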

- User message arrives → agent calls memory_retriever, pulling a ~150-triple neighborhood.

- LLM prompt = system primer + retrieved subgraph + current user instruction.

- LLM outputs a response and a memory_write_plan (list of new/updated facts).

- Memory writer converts the plan into RDF-like triples and sends them back via upsert_episode.

Sub-prompt example

    __StartKnowledgeGraphContext__
    Alice prefers_product→ Graphiti
    Graphiti released_version “0.17.8” on 2025-07-22
    ...
    __EndKnowledgeGraphContext__

The LLM can now reason: “Because Alice likes Graphiti and the latest release fixes bug X, suggest she upgrades.” Token count is kept to ~2 000 regardless of chat length.
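A minimal sketch of how such a sub-prompt might be assembled from retrieved triples. The delimiters come from the example above; the function names, the system primer and the user message are placeholders invented for illustration:

```python
def render_context(triples):
    """Wrap triples in the delimiters used in the sub-prompt example."""
    lines = ["__StartKnowledgeGraphContext__"]
    lines += [f"{s} {p}→ {o}" for s, p, o in triples]
    lines.append("__EndKnowledgeGraphContext__")
    return "\n".join(lines)


def build_prompt(system_primer, triples, user_message):
    """System primer + retrieved subgraph + current user instruction."""
    return "\n\n".join([system_primer, render_context(triples), user_message])


prompt = build_prompt(
    "You are a helpful assistant.",              # placeholder primer
    [("Alice", "prefers_product", "Graphiti")],  # retrieved subgraph
    "Should I upgrade?",                         # placeholder user turn
)
print(prompt)
```

Keeping the context between fixed delimiters makes it easy to strip, log or cap the retrieved block independently of the rest of the prompt.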

Tip: Use separate retrieval prompts for intent classification vs fact weaving to avoid “knowledge graph prompt injection.” Graphiti lets you attach an Episode group label (e.g., INTENT) and filter queries accordingly.

The gains from using this graph can include:

- Compressed context: roughly a 2× reduction in prompt size

- Improved precision and recall (higher F1)

- Reduced latency (because fewer tokens are processed)

9. Challenges and research frontiers

- Extraction reliability – LLM-based relation extraction is still noisy; active-learning loops and human feedback remain essential.

- Logical consistency – Paradoxes or duplications lurk in merged graphs; constraint-solvers or SHACL validation can help.

- Temporal reasoning – Query languages must handle Allen interval algebra (before, overlaps, during). Graphiti’s current valid_at filter is a start, but richer temporal joins are on the roadmap.

- Privacy & security – A per-edge ACL might be required once personal data flows in.

- Multimodal graphs – Images, audio and code snippets as first-class nodes need specialised embeddings and viewers.

- Explainable reasoning – Surfacing a minimal proof graph to the user is an active research topic.

- Edge-weight learning – Dynamic weighting of relationships (confidence, recency, sentiment) can guide traversal heuristics.

- Inter-agent graph sharing – Federated or sharded KGs let multiple agents collaborate without leaking private context.
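For the temporal-reasoning item, the three Allen relations named above are simple to state over (start, end) pairs. This sketch is independent of any graph store; the interval names and dates are made up:

```python
from datetime import date


def before(a, b):
    """a ends strictly before b starts."""
    return a[1] < b[0]


def overlaps(a, b):
    """a starts first and ends inside b."""
    return a[0] < b[0] < a[1] < b[1]


def during(a, b):
    """a lies strictly inside b."""
    return b[0] < a[0] and a[1] < b[1]


# Intervals as (start, end) tuples; all dates are illustrative.
lives_in_paris = (date(2023, 1, 1), date(2025, 3, 1))
conference_trip = (date(2024, 6, 1), date(2024, 6, 10))
project_zephyr = (date(2024, 12, 1), date(2025, 6, 1))

print(during(conference_trip, lives_in_paris))   # trip happened while in Paris
print(overlaps(lives_in_paris, project_zephyr))  # project began before the move
print(before(conference_trip, project_zephyr))   # trip ended before the project
```

A point-in-time filter only answers "was this edge valid at t?"; relations like these compare two edges with each other, which is what temporal joins require.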

Academic efforts such as A-MEM and industry projects like LangGraph memory point toward hybrid systems where symbolic links and neural embeddings co-evolve. Expect further convergence with “retrieval-augmented generation 2.0,” where graphs provide the index and LLMs fill in the gaps.

10. Conclusion

Knowledge graphs transform ephemeral chat logs and scattered data silos into a living, navigable memory palace for LLM agents. By structuring experiences as nodes and edges, annotated with time and provenance, we gain compactness, retrieval speed and a foundation for explicit reasoning. Frameworks such as Graphiti make this shift accessible: install a Docker image, feed it triples, and watch your agent’s recall, consistency and cost profile improve. While challenges in extraction accuracy, temporal logic and privacy remain, the trajectory is clear: the next generation of AI assistants will not just predict text but build and traverse rich semantic graphs, much like humans chart mental maps of their world.